Blogpost

Compressing global illumination with neural networks

Can neural networks assist in bringing global illumination to the web? Can they also help with adding limited dynamic global illumination? Can they do all that while keeping performance high? Dig in!

The following is a story that meanders left and right all the way until the end, but for the busy reader, the link to the demo is at the bottom of the page.

It was 5:33 AM – yeah, super early or something. But I couldn’t sleep, since there’s this thing we’ve all mostly forgotten about in the past few years. It’s called jet lag.

But SIGGRAPH had just ended the previous day and left an intoxicating buzz of ideas swirling around in there, so being awake while the world was still just booting up, was a great way to give ample room for those thoughts to grow and morph into more concrete shapes.

It was my first time attending the yearly pilgrimage for computer graphics witches and wizards, and among the things that impressed me the most was just how singular the results of extensive digging (also called research and development) by an expert, or a team of experts, can be. And sure this is intuitive for just about any human endeavour, but in computer graphics, this process and steps along the way are also exceptionally visual, almost tangible. If the digging came to a halt at any one of the many incremental interim steps towards say, real-time volumetric clouds or realistic embroidery, the results would be quite inferior. The good people at SIGGRAPH simply rarely stop.

And so on that morning, I was thinking about problems that would require a lot of such digging (you know, for fun), and I was thinking about the limitations of web graphics, about mobile graphics, about global illumination, and about how smooth diffused light sometimes is and how we could possibly approximate that while keeping performance high and... Hey, wait a second! It is smooth! I wonder if we could describe some qualities of diffused light with simple math. Not simple math like that in the rendering equation, which ends up eating computation for breakfast, but simple like y = kx + n and its high-school friends like y = sin(x). And in fact, at that particular moment, I was looking at light from the hotel room’s bedside lamp that bounced from a wooden surface back onto the wall and formed this lovely soft sinusoidal shape. If you took the surface of that wall, I bet you could construct some simple function that would describe the colours shown there. So I tried! And yeah, getting that initial shape is not too tricky, playing with the parameters of a polynomial, but then you still need to blur the edges in a very particular way and that’s quite a bit more challenging – Argh! We’re getting nowhere like this.

Oh. Wait.

I do know of a certain statistical method that’s pretty darn good at adjusting and optimising many many parameters.

And so a new entry appeared in my notes:

What if we could train a very small neural network with all the different lighting scenarios for a specific scene, and do inference in real-time to get the desired fragment colour?

I closed my laptop and started to pack my bag. It was time to leave Vancouver behind and fly back home to Ljubljana.

The start of the dig

Now some of you reading this likely have a background in machine learning or computer graphics or both, and regardless of which of these groups you belong to, surely you’re thinking this is crazy. And with good reason too! You’re thinking light can behave in very complex ways, so there is practically no chance of teaching a small neural network all of that behaviour for even a simple scene. And you’re right, of course! But see, being a newbie I didn’t know that. I just thought about the smoothness of that diffused light I observed – and started to dig.

As with all good digs, this one also started with a literature search. Turns out neural networks have already been used for global illumination: Thomas and Forbes have shown that using a GAN they can compete with VXGI in terms of quality and performance. So you’re telling me it is possible! Unfortunately, that result was for 256 pixels times 256 pixels image and on a massive GPU. Something more performant was needed, something that could run in a browser at 60 fps on a mobile device that’s getting fairly long in the tooth - and a 16-layer neural network definitely wasn’t it.

Getting something that performs well while keeping the quality high was going to be an issue, though. While that paper showed that it is possible for large networks with tons of parameters to accurately approximate a scene’s global illumination, it also showed that smaller networks produced results of very low quality. And so the dream of teaching a network about all the different dynamic aspects of a scene (lights and objects moving and rotating, etc.) while also keeping it nimble was dissipating quickly.

Well... that sucks! Feels like a dead end. But you know what, maybe we should keep digging.

The dig

It is now clear that this lunch won’t be free, so limiting the buffet options could be a good idea. What if we tried to merely replace a single static baked lightmap with a neural network, without any dynamic component at all? Just so we get our bearings. And how does one even represent images using neural networks? Enter, stage left, Sitzmann et al. and their "Implicit Neural Representations with Periodic Activation Functions" paper from 2020, in which they describe using sine as activation functions resulting in a network that is "ideally suited for representing complex natural signals and their derivatives." Hey, that sounds like exactly what we need!

So I got back to digging, setting up a Colab notebook, adding a custom activation function to a Keras model layer, testing how many sine operations I could squeeze into a fragment shader to help choose the depth and width of the neural network, and got to training. With x and y as inputs, red, green and blue as outputs, and only 4 hidden layers with 32 neurons each, this worked exceptionally well for a single image. So well, in fact, it felt like much more information could be stuffed in there!

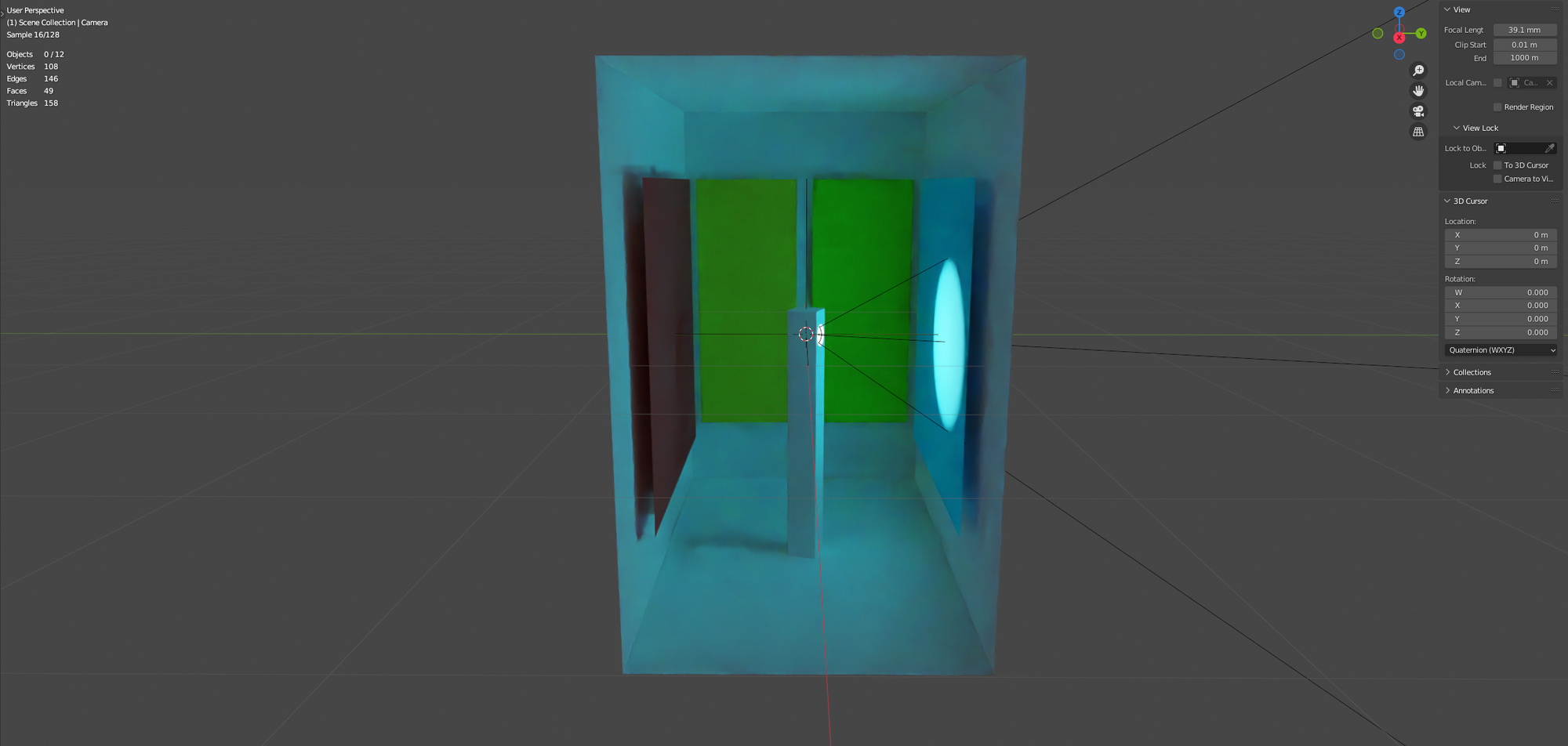

So I modelled a scene in Blender with one rotating light, wrote a script to bake the diffused light for a single surface (namely the coloured plane on the left at the back of the box) 360 times, once for each degree of the light’s rotation around a single axis, and added an additional input for that rotation, ω, and got to training.

The result can be seen in the video below – The first 10 seconds are the network’s behaviour outside of the trained input range (specifically ω goes from -1.0 to 0.0), then 10.0s to 20.0s represents the diffuse light while the light in the scene goes from 0 to 360 degrees (ω from 0.0 to 1.0). And after those 20.0s the network goes back to dreaming about undulating velvety coloured hills and valleys, as the inputs slide out of range again (ω goes beyond 1.0).

In only 52kB of GLSL we were able to represent the diffused light that is the result of a light rotating 360 degrees and its rays bouncing off the various coloured surfaces in the scene.

This means that even though the initial dream of a fully dynamic scene isn’t achievable (yet?), there is merit to the idea of neural networks representing diffused light with some dynamic components.

Alright, let’s keep digging then.

Putting it all together

The scene I modelled consisted of 9 other surfaces, which I then baked in the same way, and trained in the same way to eventually produce 10 individual fragment shaders, one per surface. Since the networks represented diffused light only, we have to combine their output with Three.js’s direct lighting (and shadowing) capabilities.

The final result can be seen in the video below:

What you see here is a 748 x 1194 video, meaning that there are 893,112 pixels shown. Each of those pixels is doing inference on a 3,395 parameter network, 60 times per second (and this also runs at 60 fps on an iPhone Xs Max).

The demo also compiles to roughly 600 kB of JS, of which 400 kB are dependencies like Three.js, react-three-fiber and drei. Two hundred kilobytes is not a bad size for 10 neural networks and the required shader code, giving us resolution independent rendering of diffused light for one light rotating around its axis. As a comparison, the baked images used as training data added up to 1.8 GB. Additionally, it’s hard to argue with the fact that the result simply looks good.

Caveats

But argue we will! So, what’s the catch? As you can probably already tell, there are quite a few, and I’ll list them here just before the links to the demo code and shaders, so we’re all on the same page, literally:

- Each of these shaders takes a whopping 15 seconds to compile on browsers that use ANGLE as their WebGL backend on Windows. In practice, that means that your browser will freeze for minutes before these shaders are compiled for even a simple scene like the demo. This can be remedied by using the OpenGL backend in both Firefox and Chrome (see this discussion) and is not an issue at all on a Mac (compiles very quickly), but it’s a real bummer. Maybe someone could look into that?

- The dynamic component’s light impact needs to be sampled (e.g. by baking 360 images for a light’s rotation, baking 100 images of an object’s movement, baking multiple images of changing material properties and so on) and then all of the surfaces in a given scene have to be trained for those conditions. This can be a laborious and computationally intensive process, even though it only happens once at build time.

- Depending on what your scene is like, it might have complex diffused light qualities that exceed the capabilities of this small network (and will thus appear blurry). Some of this can be visible as artifacts on the rotating light’s stand, as in that case its 5 visible surfaces were all mapped to a single image, resulting in qualities that the network struggled to learn.

Demo

First, here’s a link to the shader for a single surface, where you can look under the hood, so to speak. It also allows you to get a feel for how the individual parameters affect the final output so feel free to play around (it can be surprising!):

And for the assembled demo, here’s a link to a CodeSandbox, so that you can easily take it apart: https://codesandbox.io/s/exploring-gi-with-nn-lyfq9e.

Future

Given the mentioned constraints it is unlikely that this technique in its current state will be useful beyond a very narrow scope of projects, but I firmly believe that making pixels smart is just beginning to show its potential. The ability to successfully embed a useful neural network in a fragment shader and still have it perform well was quite a surprise for me. Other than baking-type scenarios, one could also imagine, for example, a shader in a path tracing renderer that is trained for a specific dynamic scene to output directions of bounced light towards objects that are likely to have a major contribution toward the final pixel colour, instead of sampling the scene blindly. Or a shader for caustic effects in water, that’s trained on fully ray-traced images, with the current phase of the waves above as input. What could you use it for?

Let's get back to digging.